A new way to generate images from text

Stable Diffusion, an open-source AI text-to-image model, was recently released. It’s notable for being the first “big-boy” (i.e. high-fidelity, state-of-the-art quality) model that is completely free for anyone to download and use.

If you haven’t already, check out my post about DALL-E 2 for some background on what the heck this AI art stuff even is.

Setup

Free Demo

There’s a free demo on Hugging Face, which is a great way to test it out. Although it can be run from anywhere (even mobile), it’s slow, since it runs on a free tier with an open queue.

Good for just experimenting, but not for serious work.

Google Colab Notebook (web client)

If you don’t have a GPU (or don’t want to use your own hardware) to run the model yourself, or you’re not a nerd who can follow programming instructions, you can use a Google Colab notebook. This is a web-based client that lets you run the model in the cloud. It’s free, but you’ll need a Google account to use it.

There are a few different notebooks out there, and more will probably keep appearing. Google “Stable Diffusion colab notebook” if these are out of date.

Local: UNIX setup for lstein/stable-diffusion

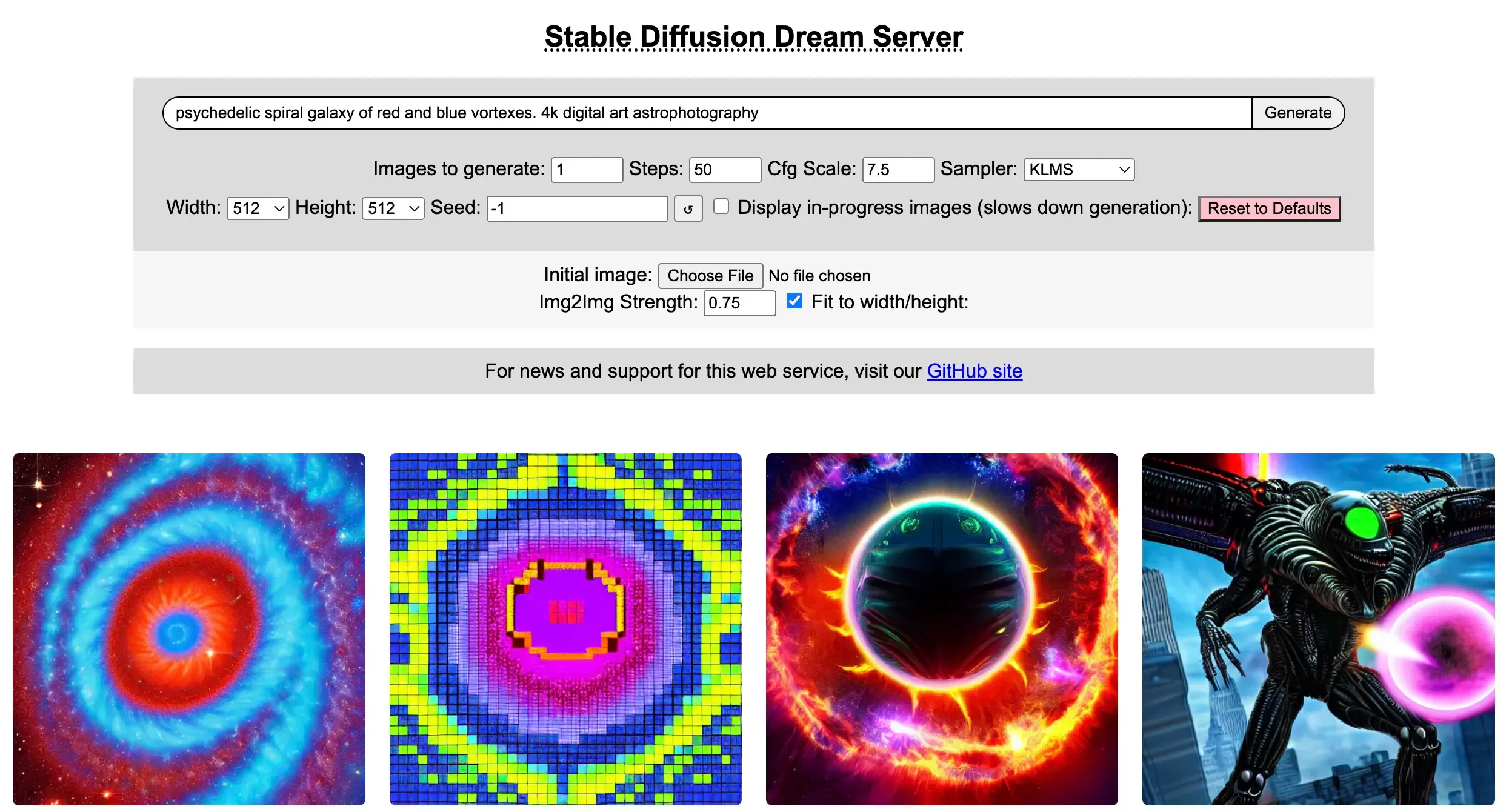

If you use an Apple Silicon Mac, you can use lstein’s fork of Stable Diffusion, which adds support for M1/M2 Macs, as well as a very cool web interface that makes prototyping easy.
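Not sure whether your machine qualifies? Here’s a minimal sketch of a check, not part of lstein’s fork. The helper names `is_apple_silicon` and `mps_available` are made up for illustration, and the second one assumes the fork relies on PyTorch’s MPS (Metal) backend, which may not match how your install is actually set up.

```python
import importlib.util
import platform


def is_apple_silicon(system: str, machine: str) -> bool:
    """Return True for a native arm64 Python on macOS.

    Note: a Python running under Rosetta reports x86_64, so this
    returns False there even on M1/M2 hardware.
    """
    return system == "Darwin" and machine == "arm64"


def mps_available() -> bool:
    """Return True if PyTorch is installed and reports a usable MPS device."""
    # Assumption: PyTorch is the framework in use; if it is not installed,
    # report False instead of raising an ImportError.
    if importlib.util.find_spec("torch") is None:
        return False
    import torch

    # Older PyTorch builds have no torch.backends.mps at all.
    mps = getattr(torch.backends, "mps", None)
    return bool(mps is not None and mps.is_available())


if __name__ == "__main__":
    print("Apple Silicon:", is_apple_silicon(platform.system(), platform.machine()))
    print("PyTorch MPS available:", mps_available())
```

If the first line prints `False` on a Mac you know is M1/M2, your Python is probably an Intel build running under Rosetta.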

Just follow the setup instructions; they work as-is. However, some of the scripts had problems running on my M1 MacBook, so I just used the dream web interface instead:

# run the web interface
$ python3 scripts/dream.py --web

Results

Check out all my Stable Diffusion results here. Here’s a sample: